11th May 2018

We do not collect or store any information about visitors to this web site. I turned off Google Analytics and removed that code long ago (never used it). No access logs of IP addresses are kept at my cloud server provider (DigitalOcean); there are no logins, user accounts, or comments on this blog. We don't sell anything, we don't have anyone's email address to send them interesting emails, and finally we have no idea who or how many people read this site.

Happy 25th May 2018 GDPR Enforcement Day!

28th October 2017

The name of my company is C&A Docs.com with a thin space between ‘C&A’ and ‘Docs’; typographically, it looks different from a regular space. Here's a visual comparison:

| Spacing | Rendering | Note |
|---|---|---|
| U+202F | C&A Docs.com | doesn't render correctly in many fonts |
| hair space | C&A Docs.com | |
| | C&A Docs.com | |
| | C&A Docs.com | |
| regular space | C&A Docs.com | |
| no space | C&Adocs.com | alternative embodiment |

Of the alternatives, I think I like and (no space) the best. Here's what they look like on links; the spacing on links is consistent:

| Spacing | Rendering on a link |
|---|---|
| hair space | C&A Docs.com |
| | C&A Docs.com |
| | C&A Docs.com |
| regular space | C&A Docs.com |
| no space | C&Adocs.com |

The best of the available candidates are: C&A Docs, Inc. (hair space), C&A Docs, Inc. (thin space), C&A Docs, Inc. ( ), or C&Adocs, Inc.
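To reproduce the comparison without fighting an editor that silently normalises spaces, it helps to insert the separators by code point. A minimal sketch, assuming the standard Unicode code points for the spaces named above (the function name `variants` is my own), that prints each candidate with its Unicode name and an HTML numeric character reference:

```python
import unicodedata

# Candidate separators between "C&A" and "Docs", by standard Unicode code point.
SEPARATORS = {
    "regular space": "\u0020",
    "thin space": "\u2009",
    "hair space": "\u200a",
    "narrow no-break space": "\u202f",  # U+202F
    "no space": "",
}

def variants(prefix="C&A", suffix="Docs.com"):
    """Yield (label, rendered name, Unicode name, HTML reference) tuples."""
    for label, sep in SEPARATORS.items():
        if sep:
            name = f"{prefix}{sep}{suffix}"
            uname = unicodedata.name(sep)
            ref = f"&#x{ord(sep):X};"  # numeric reference survives any editor
        else:
            # The no-space form lower-cases the suffix, giving "C&Adocs.com".
            name, uname, ref = f"{prefix}{suffix.lower()}", "(none)", ""
        yield label, name, uname, ref

if __name__ == "__main__":
    for row in variants():
        print(" | ".join(row))
```

In real HTML the ampersand in ‘C&A’ should itself be written as `&amp;`.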

There is no visual difference between typewriter's and HTML's apostrophes, at least in my browser. I do like proper ‘quotation marks’ but they're a pain to encode so I don't do it often.
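Encoding proper quotation marks by hand is tedious, but a rough automatic pass is short. This is a heuristic sketch, not a full typographic engine (the function name is hypothetical): a straight quote at the start of the text or after whitespace or an opening bracket becomes ‘, and every other one becomes ’.

```python
import re

# A straight quote at the start, or after whitespace or an opening bracket,
# is treated as an opening quote; all others become closing quotes/apostrophes.
_OPENING = re.compile(r"(^|[\s(\[])'")

def smarten_single_quotes(text: str) -> str:
    """Convert typewriter single quotes to U+2018 / U+2019."""
    text = _OPENING.sub(lambda m: m.group(1) + "\u2018", text)
    return text.replace("'", "\u2019")
```

It gets elisions such as ’90s wrong (it produces ‘90s), so the result still needs a human glance. In HTML source the same characters can be written as `&lsquo;` and `&rsquo;`.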

8th September 2017

I'm working on a new project, turingwasright.com, that's going to transform cross domain solutions.

Offering a unique convergence of very high reliability, very low cost, reduced certification needs, and international supply chain acceptability, it will replace some higher-cost alternatives in sensitive application areas while at the same time opening up new markets where expensive cross domain solutions could not previously be justified for cost reasons.

1st September 2017

HTTPS is forced by the web server now, obviating any need for the JavaScript formerly used in the page header to redirect all http:// connections to https:// automatically.
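The post doesn't say which server software does the forcing. As an illustration only, here is the same idea as a small Python WSGI middleware (`force_https` is a hypothetical name): any request that didn't arrive over HTTPS gets a permanent redirect to the https:// form of the same URL, built with the standard library's `wsgiref.util.request_uri`.

```python
from wsgiref.util import request_uri

def force_https(app):
    """Wrap a WSGI app so plain-HTTP requests get a 301 to the HTTPS URL."""
    def wrapper(environ, start_response):
        if environ.get("wsgi.url_scheme") == "https":
            return app(environ, start_response)
        # Rebuild the requested URL (host, path, query), then swap the scheme.
        url = request_uri(environ, include_query=True)
        location = "https://" + url.split("://", 1)[1]
        start_response("301 Moved Permanently",
                       [("Location", location), ("Content-Length", "0")])
        return [b""]
    return wrapper
```

Behind a TLS-terminating reverse proxy, `wsgi.url_scheme` may read `http` even for HTTPS clients, which would cause a redirect loop; that is one reason to do this in the front-end server, as this site does.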

18th April 2017

My one-page résumé is here. My long CV is always here.

1st March 2016

A year in, we're changing the name of the company to C&Adocs, Inc. because it's shorter, doesn't require explaining to non-programmers, and is much easier to type.

Email if you want our new stickers!

31st December 2015

The git repository at https://github.com/jloughry/data contains all supplemental data from Appendix B of my D.Phil. thesis, *Security Test and Evaluation of Cross Domain Systems* (University of Oxford, 2014 or 2015), for replication. The Oxford University Research Archive (ORA) also has them available.

26th November 2015

If we design weaknesses into our own system, some of the bad guys will use a different system (one without weaknesses) instead. Others will exploit the weaknesses in our system to hurt us.

There's little evidence that non-state actors use encryption much anyway; but if they want it, there are plenty of alternatives designed and implemented outside the USA that they could use instead. Much more likely, I think, is that the back door key would be compromised and exploited almost immediately, as happened with DVD copy protection and region codes.

The entire debate is a repeat of 1993, when a similar proposal was floated for an official cryptosystem (the Clipper chip) with a designed-in back door called the Law Enforcement Access Field, or LEAF. Way back in the 1970s, the first official data encryption standard (DES), used by banks to protect their wire transfers, was deliberately weakened. That time, it was done more cleverly: the fact that DES was weakened was obvious to mathematicians at the time, but it was also obvious that it could only be exploited by an entity with the computational resources of NSA. It was a safe bet at the time that no one else on the planet had NSA's computational resources. This time is different; they're proposing a weakness the control of which would be administrative, not controlled by the laws of physics. The key, literally, would be a key — steal a copy of that key, and you're in. (I've seen no actual technical proposal, only political calls for one; a sensible way to design such a thing would have multiple keys, for both accountability and damage control, but the principle stands: control of access to the keys would be administrative, not proof-of-work, and that's weak.)
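The parenthetical's ‘multiple keys’ idea can be illustrated with the simplest form of secret splitting: XOR the key with random pads so that every share holder must cooperate to rebuild it. This is an n-of-n split chosen for brevity (a threshold scheme such as Shamir's would be the usual k-of-n choice), and the function names are hypothetical. It doesn't change the argument: whoever gathers all the shares still holds an administratively controlled key.

```python
from __future__ import annotations

import secrets
from functools import reduce

def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def split_key(key: bytes, holders: int) -> list[bytes]:
    """Split key into `holders` shares; all of them are needed to rebuild it."""
    if holders < 2:
        raise ValueError("need at least two holders")
    pads = [secrets.token_bytes(len(key)) for _ in range(holders - 1)]
    # The last share is the key XORed with every random pad.
    last = reduce(_xor, pads, key)
    return pads + [last]

def join_key(shares: list[bytes]) -> bytes:
    """XOR all shares together to recover the original key."""
    return reduce(_xor, shares)
```

Any subset short of all the shares is indistinguishable from random bytes, which gives the accountability property; damage control would additionally need revocation, which this sketch doesn't attempt.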

It seems to me that if a system like this were mandated, and therefore in widespread use, there would be correspondingly frequent calls for use of the ‘back door key’ in investigations. Each time would be an opportunity for control of the key to be lost, compromised, or stolen. A concrete example of this happened last year with ‘TSA approved’ luggage padlocks. A few years ago, laws were passed that said that airport inspectors were allowed to cut off locks on suitcases they wanted to inspect. Special padlocks could be bought by travellers who wanted to lock their suitcases; these ‘TSA approved’ locks could be opened by master keys that only airport inspectors possessed. The government was so proud of this technical solution that they put a photo on their web site of an officer holding a ring of the special keys. A high resolution photo. Within days, people had successfully copied the bitting patterns of all the keys from the photo. Complete sets of keys can now be purchased on eBay.

The government tells us that without controls on data encryption, police investigations will ‘go dark’. But that's contradicted by two facts. Firstly, good encryption has been widely available for twenty years; prompted, in fact, by the 1993 proposal, lots of really good free software was written, and lots of companies started selling it as well. (The only reason it's not widely used, except by nerds, is that it's a pain in the neck to use. Terrorists don't use it for the same reason.) Secondly, despite twenty years of very effective crypto software that's free for anyone to use (or easy to buy if you want a commercial product that comes with a warranty), law enforcement investigations have not gone dark: the number of wiretaps approved by the FISA court and the number of national security letters issued to librarians, network operators, and email administrators (both of which are public record) have increased dramatically since 9/11. They wouldn't be asking for approval for tens of thousands of wiretaps if wiretaps weren't working.

It's not even possible to argue this one as devil's advocate; I tried just now. No law I can think of would work completely. The most straightforward way to attempt it would be to employ ‘reverse entropy detectors’ on the Internet; i.e., any traffic not using approved encryption methods would be dropped. However, an easy countermeasure would be steganography, and that uses the laws of physics right back against you. It's a logical contradiction. The horse is out of the barn; locking the door won't work any more.
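A ‘reverse entropy detector’ would presumably start from something like the Shannon entropy of a payload's bytes: well-encrypted or compressed data sits close to 8 bits per byte, ordinary text well below. A minimal sketch (the function name, and any threshold you would compare against, are hypothetical); note that it measures only byte statistics, which is exactly what steganography is designed to keep looking ordinary.

```python
import math
from collections import Counter

def bytes_entropy(data: bytes) -> float:
    """Shannon entropy of the byte distribution, in bits per byte (0 to 8)."""
    if not data:
        return 0.0
    n = len(data)
    # Sum of p * log2(1/p) over the byte values that actually occur.
    return sum((c / n) * math.log2(n / c) for c in Counter(data).values())
```

For example, a run of one repeated byte scores 0.0, two alternating bytes score 1.0, and a buffer in which all 256 byte values are equally frequent scores the maximum, 8.0.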

Takeaways:

Every assertion in the above paragraphs is supported by evidence; none of it is speculation.

16th October 2015

...the intelligence community (IC) solved the same information sharing problems—coöperating with partners you don't necessarily trust, privacy-preserving database queries, maintaining information integrity, anonymisation—decades ago, but no one on the outside knows about it. Well-tested solutions exist there, proof against not just criminal or amateur hackers, but attackers with nation-state-level funding and resources.

The healthcare industry in the U.S. has an information sharing problem: individuals trust their doctors, but those same individuals don't trust health insurance companies. Doctors are forced to trust multiple health insurance companies—because doctors need to get paid—but insurance companies certainly don't trust one another (because they compete). Cancer researchers, on the other hand, have a desperate need to access everyone's healthcare records, for epidemiological studies—but they don't need or want personally identifiable information.

It's the exact same problem as faced by the CIA, U.S., and allied military forces in wartime: maybe the U.S. trust the U.K., who have a backchannel to Syria via the French; the Russians don't trust the U.S. but need to co-ordinate air strikes with American forces, and all of them pull news feeds from CNN and Twitter but don't want to leak information back to the press. This is a totally solved problem. Healthcare could put the IC's solution straight to work.
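One standard building block for the researchers' side of this problem (not necessarily what the IC uses) is keyed pseudonymisation: replace each patient identifier with an HMAC under a key that the data holder keeps, so records from different sources still link up but the researcher never learns whom they describe. The field names below are hypothetical.

```python
import hashlib
import hmac

def pseudonymise(record: dict, key: bytes, id_field: str = "patient_id",
                 drop: tuple = ("name", "address")) -> dict:
    """Copy `record`, replacing the identifier with a keyed pseudonym and
    removing directly identifying fields."""
    out = {k: v for k, v in record.items() if k not in drop and k != id_field}
    digest = hmac.new(key, str(record[id_field]).encode("utf-8"), hashlib.sha256)
    out["pseudonym"] = digest.hexdigest()
    return out
```

Pseudonymised data is not anonymous: quasi-identifiers such as birth date and postcode can still re-identify people, which is part of why the general problem needs the heavier machinery the IC has.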

21st May 2015

The following words should be struck out and replaced by something else.

6th May 2015

9th April 2015

What are the limits of what can be gotten away with in the UTF-8 context of a commercial web site like LinkedIn?

Limited support for multiple columns:

Column A Column B Column C

Surprisingly, the Unicode rendering functionality usually is complete enough that even things like RIGHT TO LEFT OVERRIDE work as expected. I wasn't able to bend it to animation, though.

ʇxǝʇ spɹɐʍʞɔɐq puɐ uʍop ǝpısdn
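The post doesn't say how the upside-down line was produced. One simple way, sketched here under that caveat (the names are mine), is to reverse the string and swap each lowercase letter for a look-alike that appears rotated 180°; characters without a mapping pass through unchanged.

```python
# Approximate rotated look-alikes for lowercase ASCII letters; a few letters
# (l, o, s, x, z) stand in for themselves.
_UPSIDE_DOWN = str.maketrans(
    "abcdefghijklmnopqrstuvwxyz",
    "ɐqɔpǝɟƃɥıɾʞlɯuodbɹsʇnʌʍxʎz",
)

def upside_down(text: str) -> str:
    """Reverse the text and replace each letter with its rotated look-alike."""
    return text[::-1].translate(_UPSIDE_DOWN)
```

`upside_down("upside down and backwards text")` reproduces the line above. Rendering still depends on the reader's fonts covering the IPA Extensions block.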

╔═╦╗

╠═╬╣ box drawing works well if enclosed in <pre> tags.

╚═╩╝

▂▃▄▅▆▇█ Tiny bar charts (i.e., Tufte's “sparklines”) can be embedded, but font support for the necessary characters is problematic in browsers.
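Generating such a sparkline is a matter of scaling each value onto the eight block elements U+2581 to U+2588. A minimal sketch (the function name is hypothetical); it uses U+2581 as the lowest bar, one step below the ▂ that starts the example above.

```python
# U+2581 LOWER ONE EIGHTH BLOCK through U+2588 FULL BLOCK: eight bar heights.
BARS = "".join(chr(cp) for cp in range(0x2581, 0x2589))

def sparkline(values) -> str:
    """Map each value linearly onto one of the eight block characters."""
    values = list(values)
    if not values:
        return ""
    lo, hi = min(values), max(values)
    if hi == lo:  # a flat series: draw every point at the lowest height
        return BARS[0] * len(values)
    top = len(BARS) - 1
    return "".join(BARS[round((v - lo) * top / (hi - lo))] for v in values)
```

For instance, `sparkline([1, 2, 3, 4, 5, 6, 7, 8])` returns the full eight-step ramp; whether it looks right on screen is still up to the browser's font fallback.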

There is limited support for other graphics; e.g.,

╭╮

╰╯

25th March 2015

23rd March 2015

The entire site is now accessible via https:// and will quietly redirect from HTTP if entered that way.

24th February 2015

The software development and C&A consulting firm call-with-current-continuation.com, Inc.™ is open for business.

20th February 2015

Figure 1. Cross domain systems are unique because they always go into an adversarial environment with mutually distrustful data owners.

An idea I've been playing around with for a while involves characterising every allowable permutation of cross domain system (CDS) in collateral, SCI, and international accreditations. The approach has been peer reviewed and published (13th IEEE Conference on Technologies for Homeland Security, Boston: 2013); the presentation here is based on a talk I gave last year.

Incident response, analytically, looks a lot like an international coalition; in the case of major incident response, you have large organisations trying to negotiate their jurisdiction and announce their capabilities, in a short amount of time, to those who need it.

(The moral of the story is: don't overlook potential applications of CDS in ephemeral situations—but to do that, we'll need faster and less expensive A&A.)

Cross domain systems exist to interconnect...anything. They will talk any protocol needed to do it—but they focus on protecting systems from each other. That's what distinguishes them from firewalls; a firewall is usually thought of as protecting an organisation's resources from outside threats, but a cross domain system protects the rest of the world from the organisation as well.

(If that reminds you of protecting financial markets from rogue traders with access to inside information, it should.)

Certification and accreditation testing are my interest. (“Assessment and Authorisation” is the preferred terminology, but I'm using the older “C&A” nomenclature here.) Certification testing is done on a particular configuration of hardware and software called the ‘evaluated configuration’. Usually, an evaluated configuration is not a realistic field setup, because certification testers usually want all the features turned on so they can test them. Penetration testing is often done at this phase; penetration testers similarly demand root access and source code. Much like covert channel analysis, penetration testing has no natural end state; pen testers, given enough time, nearly always can come up with yet another layer of attack. Part of my research aims to give the cross domain system developer some measure of control over the duration of certification testing, which otherwise will stretch out to consume all of the available time, money, and resources.

The second type of testing done on cross domain systems happens at the end of installation, but before approval-to-operate. It's called accreditation, and it's done—every time—on a particular installation, in a particular place, with a particular configuration and particular users. Certification may be done once, or at most once a year; accreditation is done every time you sell one.

It pays to get good at accreditation, because you'll be doing it lots.

Accreditation testing is interesting because for cross domain systems it always involves more than one accreditor—accreditors represent the interests of data owners—who mistrust one another. Since there are multiple accreditors, and since each one thinks his or her data is the most important, this leads directly to multiple rounds of testing. Everyone knows what the best attacks are; there are only so many variations on how you can test that. If every one of those accreditors, for due diligence, performs the same tests over and over again, we don't learn anything new. That's the problem I set out to solve.

In my model, the following assumptions are required:

It's a minimal set of assumptions, not too unrealistic and not far off from the real world. First, we assume that all accreditors have just the right security clearance to do their jobs. If they work on Top Secret, they have a Top Secret clearance. If they only work on Confidential, then they will not have a Secret clearance, or a higher one. Everybody's cleared only to the level they need to be.

The second assumption is that there's a high side and a low side. That's often a matter of opinion, especially in international accreditations, but it simplifies the analysis. Besides, you can always partition any cross domain system with more than two inputs or outputs pairwise into a series of one-in-one-out cross domain systems. We used to have to do this all the time with pie-in-the-sky PL-5 customer requirements that we could compose out of one or more PL-4 approved components. PL-5 components are expensive; PL-4 components are not. So you can always reduce a cross domain problem, ultimately, to a high side and a low side.

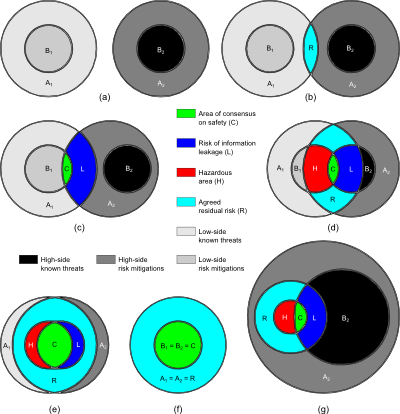

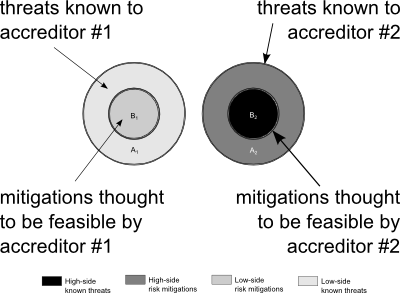

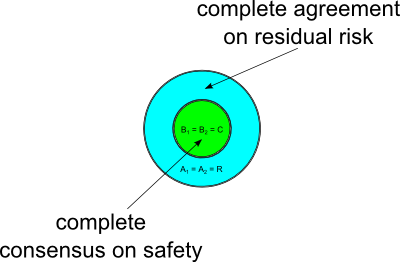

Consider an accreditor's view of the system (Figure 2, above). The larger circle represents those threats that would be desirable to mitigate. The smaller circle contains the subset of threats that it's feasible to mitigate—everything has a cost, and not every security control the accreditor wants, the accreditor is likely to get. The area between the circles is the residual risk.

Figure 3. SCI-type cross domain system

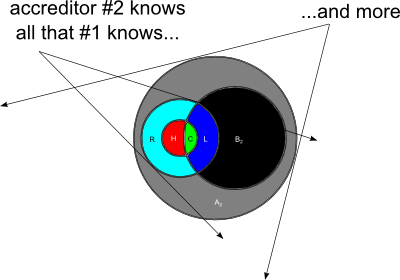

In Figure 3, to the right, we have a multi-level system. As soon as you have a multi-level system, you may have two accreditors, and in the most general case, shown here, they share no information.

To the right, then, is the essence of an SCI (or international) accreditation.

Accreditor 1 has a completely independent idea of what threats exist (A1) and what risk mitigations are possible (B1); similarly, Accreditor 2 is cognisant of a different set of risks (A2) that would be desirable to mitigate, and a smaller set (B2) of risks that are, from Accreditor 2's perspective, possible to mitigate. The sets are disjoint because we assume (in the most general case) that accreditors read into different compartments are unable or unwilling to share information.

The circles may overlap—of course they do!—everyone knows what the common attacks are, just as everyone knows what's affordable to mitigate. Those are your baseline security controls; what we're more interested in here are classified threats and classified threat mitigations.

|

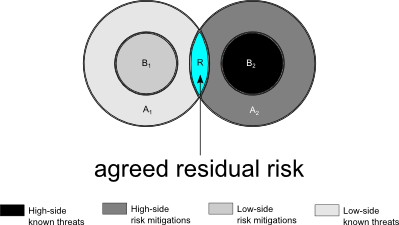

| Figure 4. Example of public information (area R) |

In Figure 4, to the left, the highlighted area R is the agreed residual risk that both accreditors share. These particular accreditors don't share much—the question of which is the ‘high side’ and which is the ‘low side’ is often a matter of opinion, especially in the SCI world—but they agree on a few known threats. Both accreditors concur that some risks, highlighted here in cyan, are not protect-able against, and are going to remain a threat to the system in production, as residual risk. It's outside the smaller areas (B1 and B2) that both accreditors know they can mitigate.

In the general sense, areas A1 and A2 probably overlap to a greater or lesser degree—since everyone knows about the major problems: bot-nets and Heartbleed and script kiddies and what-not—but it's hard to draw Venn diagrams in three dimensions.

This is the ideal SCI situation, reachable sometimes in practice. Public information, after all, need not be protected from disclosure.

|

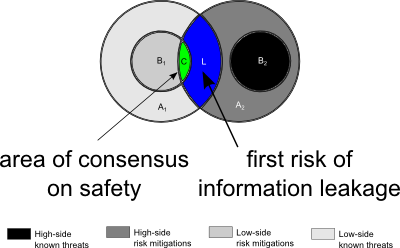

| Figure 5. Classified information (region L) at risk of information leakage |

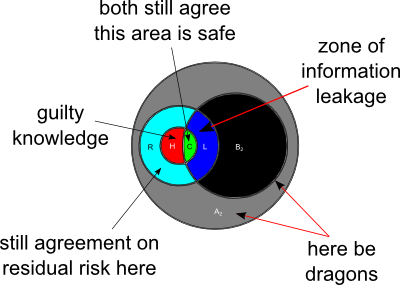

But here's where things begin to get interesting. As the two accreditors perform their security testing, both of them learn more about the system under test. The small area C, shown in green in Figure 5 to the right, is safe because Accreditor 1 has mitigated it with security controls. The second accreditor can legally be made aware (without loss of generality) that the first has mitigated that portion of the threat, so Accreditor 2 doesn't have to worry about area C any more.

But there's a new risk of information leakage from high to low. Region L, shown in dark blue, represents classified information—that is, the existence of a threat that is classified higher than the clearance of the low-side accreditor. (Remember the first assumption: that accreditors are cleared only as high as they need to be, in adherence to the letter and the spirit of the law.)

The high side accreditor, on the right side of the figure, doesn't want to admit to the low side accreditor that these threats still can't properly be mitigated even with classified security controls. But it remains safe, because the high side accreditor isn't compelled to say anything, and it's safer to keep his or her mouth shut. There's a threat of information leakage, but no actual information leakage, and the existence of the classified threat (for which no mitigation is feasible on the high side) remains protected.

|

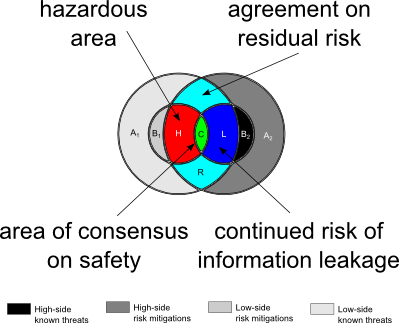

| Figure 6. The danger zone |

The red zone (Figure 6, to the left) emerges with the occurrence of forced communication for the first time. The high side accreditor—and remember, this is an international (or SCI) cross domain system, so the question of which side is the high side is a matter of opinion—knows something dangerous: he or she knows that some of the low-side security controls don't work. But that information is classified. The high side accreditor, though, is required personally to accept the risk for the correct operation of the entire cross domain system—not just part of it—if he or she accredits it.

Should the high-side accreditor refuse to accredit? To accredit anyway would be to shoulder a known risk (that the true residual risk of the system is higher than generally believed); in that case the high-side accreditor runs the risk of going to jail. But he or she cannot say precisely why accreditation is being withheld, because that would violate the oath to protect classified information; and even an unexplained refusal to accredit, in itself, leaks the existence of a classified threat with no known mitigation.

Checkmate. (Note that the previous risk of leakage of the existence of classified threat mitigations, in dark blue, still exists, but that's a different kind of risk.)

Here, there is not only a risk of information leakage but personal risk to Accreditor 2; the red region H—remember, this is still an SCI accreditation—is believed by the low side accreditor to be adequately mitigated by security controls, but the second accreditor either doesn't know about the first's mitigation or doesn't believe it'll work.

|

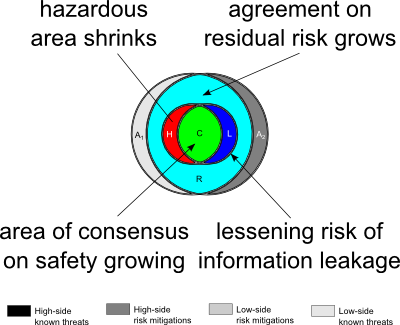

| Figure 7. As the situation approaches a purely collateral accreditation, the zone of hazardous overlap begins to shrink. |

As the two accreditors get more and more into each other's heads—facilitated by more overlap of their SCI compartments or international intelligence sharing agreements—the area of agreement over the true level of residual risk of the cross domain system grows (Figure 7, to the right). The risk of classified information leakage and personal risk to one or more accreditors diminishes as the situation approaches a pure collateral configuration.

In the degenerate situation (Figure 8, below), there is complete agreement on residual risk (A1 = A2 = R), complete consensus on safety (B1 = B2 = C), and both accreditors know all. It's not very interesting.

Figure 8. The degenerate case
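The Venn-diagram argument can be replayed with ordinary sets. This is a minimal sketch under my own reading of the figures, not the formal model from the paper: each accreditor's residual risk is A minus B (the area between its circles); R is the residual risk both share; C is what both agree is mitigated; and H, the danger zone, is whatever the low side believes mitigated but the high side considers unmitigable. The class, function, and threat labels are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Accreditor:
    desirable: frozenset  # A: threats it would like to mitigate
    feasible: frozenset   # B: threats it believes it can mitigate (within A)

    @property
    def residual(self) -> frozenset:
        """The area between the circles: desirable but not feasible."""
        return self.desirable - self.feasible

def regions(low: Accreditor, high: Accreditor) -> dict:
    """Shared regions of the two accreditors' views (labels as in the figures)."""
    return {
        "R": low.residual & high.residual,  # agreed residual risk
        "C": low.feasible & high.feasible,  # agreed to be mitigated
        "H": low.feasible & high.residual,  # low side trusts a control the high side doesn't
    }
```

With identical accreditors (the degenerate case), H comes out empty and R and C reduce to the shared residual and feasible sets.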

That was the abstract situation—one that models international or SCI accreditations well, in situations where there is no formal channel of communication between accreditors on different sides. That's not an uncommon problem for cross domain system developers to find themselves in; does the U.S. government ‘outrank’ Her Majesty's own need for information protection? The U.K. government doesn't think so.

So let's look at a simpler case, one occurring entirely inside the U.S. military or U.S. government itself (although not inside the intelligence community—they use SCI). Here we're connecting a Top Secret network to a Secret one. We can assume from this point on that the high side accreditor knows everything the low side accreditor knows. But the high side accreditor knows more—Top Secret things. Regardless, it ought to be a solvable problem, because there is no inaccessible knowledge. There is information asymmetry, but no hidden information this time. (See Figure 9, below and to the right.)

Figure 9. Collateral CDS with different security clearances and forced communication

Oh, no—it happened again! As security testing progresses, it can occur that the high side accreditor becomes aware of security controls that the low side accreditor has put in place, in good faith, but that do not work. I call this ‘guilty knowledge’ (see below). The other areas in the Venn diagrams, the dark grey and black areas, are classified threats and classified threat mitigations that the high side accreditor can't talk about, because they're legitimately classified. But the area in red represents forced communication.

Figure 10. Summary of collateral CDS difficulties: information leakage, ‘guilty knowledge’, and higher-classified threats and mitigations

There is a paradox in the Bell–LaPadula security model [1]. If you follow the letter and the spirit of the law, clearing your accreditors only to the level they need to do their jobs, then occasionally some desirable information flows are inhibited and some undesirable information flows are forced. If, on the other hand, you clear a pool of accreditors to Top Secret, and use them all the time on jobs that don't require a Top Secret clearance, then the information asymmetry disappears and the information leakage doesn't happen. It is a paradox that loosening the security rules actually improves security.
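The paradox can be made concrete with the two Bell–LaPadula rules: the simple security property (no read up) and the *-property (no write down). In this sketch the levels are the familiar collateral ones, `can_tell` is a hypothetical helper of my own, and treating human accreditors as BLP subjects is an analogy rather than the formal model. A Top Secret finding about a failed control cannot reach a Secret-cleared accreditor, but can once both are cleared to Top Secret.

```python
from enum import IntEnum

class Level(IntEnum):
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3

def can_read(subject: Level, obj: Level) -> bool:
    """Simple security property: no read up."""
    return subject >= obj

def can_write(subject: Level, obj: Level) -> bool:
    """*-property: no write down."""
    return subject <= obj

def can_tell(high: Level, low: Level, finding: Level) -> bool:
    """Can a finding classified at `finding`, known to the high-side
    accreditor, lawfully be shared with the low-side accreditor?"""
    return can_read(high, finding) and can_read(low, finding)
```

With `high=TOP_SECRET, low=SECRET`, a Top Secret finding is blocked (the inhibited flow), and a Top Secret accreditor may not write it down into a Secret accreditation report either; clearing both to Top Secret makes `can_tell` true.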

We conclude, therefore, that in some reachable cases (that is, in real-world installation problems that cross domain system developers and installers sometimes encounter in the field), desirable information flows may be inhibited, or undesirable information flows may be forced. These represent information asymmetry in the accreditor-to-accreditor relationship and are logical consequences of the hierarchical security relationship. We can't prevent them; the possibility is inherent, as we have seen, in SCI, international, and collateral accreditations.

But can we detect and avoid the trap? The goal of the Policy Interaction layer in our notional future cross domain solution is to find and highlight the problematic configurations during testing and development in order to streamline the process and reduce the cost (in both time and dollars) of CDS deployments.

References:

[1] Loughry, J. Security Test and Evaluation of Cross Domain Systems. PhD thesis, University of Oxford, 2016.

7th February 2015

This is for Papa, a link to a proof-of-concept implementation of a custom 404 error page that provides a more useful link back to content.
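The post doesn't describe how the proof of concept chooses its link. One plausible approach, sketched with Python's standard `difflib` (the page list and function name are hypothetical), is to compare the missing path against the site's known pages and offer the closest matches.

```python
import difflib

def suggest_pages(missing_path: str, known_paths: list, limit: int = 3) -> list:
    """Return up to `limit` known paths resembling the requested one, best
    first; an empty list means nothing was similar enough to suggest."""
    return difflib.get_close_matches(missing_path, known_paths, n=limit, cutoff=0.6)
```

A 404 handler would then render the first suggestion as a link, falling back to the home page when the list is empty. The 0.6 cutoff is `difflib`'s default and may need tuning for short paths.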

22nd January 2015

I've spent a few years caring deeply about the security testing of cross domain systems, a technology originally developed by the military and intelligence community, but with clear applications to other information-sharing problems like those faced by the health care industry.

My latest résumé is always here.

26th December 2014

New security contact information page here.

9th December 2014; updated 11th and 14th December 2014 and 14th February 2015

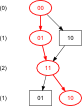

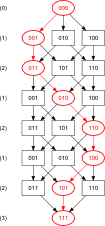

Optimal sequences for orders 1, 2, and 3 are easy to generate and not always unique; the optimal first order Banker's sequence, expressed in binary and starting from zero, is, of course, uniquely:

| Weight | Bit vector | Graph¹ |
|---|---|---|
| (0) | 0 | |
| (1) | 1 | |

¹ Example path shown in red; click graph to embiggen (SVG).

The optimal second order Banker's sequence is not unique; it can be either of:

| Weight | Bit vectors (sequence 1) | Bit vectors (sequence 2) | Graph² |
|---|---|---|---|
| (0) | 00 | 00 | |
| (1) | 01 | 10 | |
| (2) | 11 | 11 | |
| (1) | 10 | 01 | |

² Example path shown in red; click graph to embiggen (SVG).

A third order Banker's sequence admits even more solutions; here are some (but possibly not all) of them:

| Weight | Seq. 1 | Seq. 2 | Seq. 3 | Seq. 4 | Seq. 5 | Graph³ |
|---|---|---|---|---|---|---|
| (0) | 000 | 000 | 000 | 000 | 000 | |
| (1) | 001 | 100 | 010 | 001 | 100 | |
| (2) | 011 | 110 | 011 | 101 | 101 | |
| (1) | 010 | 010 | 001 | 100 | 001 | |
| (2) | 110 | 011 | 101 | 110 | 011 | |
| (1) | 100 | 001 | 100 | 010 | 010 | |
| (2) | 101 | 101 | 110 | 011 | 110 | |
| (3) | 111 | 111 | 111 | 111 | 111 | |

³ Example path shown in red; click graph to embiggen (SVG).
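Every sequence in the first three tables visits each n-bit vector exactly once, and consecutive vectors differ in exactly one bit. The optimality criterion itself isn't spelled out in the text, so the checker below tests only those two observable properties (necessary, under my reading, but not the full definition) and computes the Weight column. The function names are mine.

```python
from __future__ import annotations

def is_single_bit_path(seq: list[str]) -> bool:
    """True if seq lists every n-bit string exactly once and each step flips one bit."""
    if not seq:
        return False
    n = len(seq[0])
    values = [int(s, 2) for s in seq]
    if len(seq) != 2 ** n or len(set(values)) != 2 ** n:
        return False
    return all(bin(a ^ b).count("1") == 1 for a, b in zip(values, values[1:]))

def weights(seq: list[str]) -> list[int]:
    """Hamming weight of each bit vector, as in the tables' Weight column."""
    return [s.count("1") for s in seq]
```

All five third-order sequences pass, and each has the weight pattern 0, 1, 2, 1, 2, 1, 2, 3.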

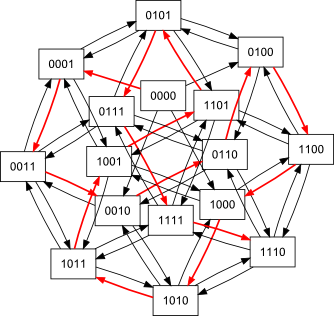

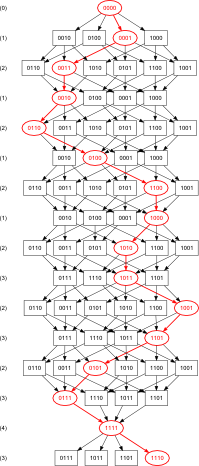

The fourth order Banker's sequence, surprisingly, is much trickier; this is the only known solution, so far, up to symmetry.⁴

⁴ Example path shown in red; click graphs to embiggen (SVG snowflake or optimal network).

It is not known whether this solution is unique, or whether any optimal solutions exist for orders > 4. A programme to search exhaustively for all solutions for any n is under development.
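As a possible starting point for such a search, here is a small depth-first enumeration of single-bit-step paths through all 2ⁿ vectors, starting from zero and optionally required to end at a given vector. It doesn't apply the (unstated) optimality criterion, and it is practical only for small n; the function name is my own.

```python
from __future__ import annotations

def single_bit_paths(n: int, end: int | None = None):
    """Yield every path from 0 through all 2**n n-bit values in which each
    step flips exactly one bit; if `end` is given, keep only paths ending there."""
    total = 1 << n
    path, seen = [0], {0}

    def extend():
        if len(path) == total:
            if end is None or path[-1] == end:
                yield list(path)
            return
        for bit in range(n):
            nxt = path[-1] ^ (1 << bit)  # flip one bit
            if nxt not in seen:
                path.append(nxt)
                seen.add(nxt)
                yield from extend()
                path.pop()          # backtrack
                seen.remove(nxt)

    yield from extend()
```

For n = 3 with `end=0b111` it yields six paths; the third-order table lists five of them, and the sixth is 000, 010, 110, 100, 101, 001, 011, 111, consistent with that table's “possibly not all”.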

11th November 2014

I have intermittent network connectivity. What I wish for sometimes is a virtual TCP/IP that would fool network applications into working well enough, without hanging, until real network service returned, at which point it would silently catch up. Web pages would not load, obviously, but I could enter new Google calendar events, send emails, and commit changes to GitHub without having to wait and remember to do it later. As it is, I write notes to myself. I think the network stack could do something similar.

Clearly, new apps can be written to have the same behaviour, but this would enable old apps, without rewriting, to have the new features.
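The wish is for this to live in the network stack, invisible to old applications; the sketch below shows only the store-and-forward idea at the application level, which is what a rewritten app would do. `send` is a hypothetical callable assumed to raise `ConnectionError` while the network is down, and the class name is mine.

```python
from collections import deque
from typing import Callable, Deque

class OfflineQueue:
    """Accept operations immediately; deliver them in order once `send`
    stops raising ConnectionError."""

    def __init__(self, send: Callable[[str], None]):
        self._send = send
        self._pending: Deque[str] = deque()

    def submit(self, op: str) -> None:
        self._pending.append(op)
        self.flush()

    def flush(self) -> int:
        """Try to deliver queued operations; return how many were sent."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # still offline: keep the rest for the next flush
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

Delivery here is at-least-once: if a send reaches the server but the acknowledgement is lost, the operation will be retried, so the queued operations need to be idempotent. A transparent network-stack version would face the same problem.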

22nd October 2014

Copies of my published papers are hosted on this web site (you can find links to them on my résumé) or on the respective copyright holders' sites. Where no freely available version exists (maybe it's behind a paywall), email me and I'll send you a copy.

20th August 2014

Key fingerprint:

2C3B 11A1 CE7C 5B1F 87BC F5D0 299D 7116 EDC2 ABE5
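A small helper for checking this fingerprint against the one your own OpenPGP tool prints: it ignores spacing and case, and derives the long (64-bit) and short (32-bit) key IDs, which for a version 4 key (implied by a 40-hex-digit fingerprint) are the low-order bits of the fingerprint. The function names are illustrative.

```python
from __future__ import annotations

import string

def normalise_fingerprint(fpr: str) -> str:
    """Remove whitespace and upper-case; a v4 fingerprint is 40 hex digits."""
    digits = "".join(fpr.split()).upper()
    if len(digits) != 40 or any(c not in string.hexdigits for c in digits):
        raise ValueError("not a 40-digit hex fingerprint")
    return digits

def key_ids(fpr: str) -> tuple[str, str]:
    """Long and short key IDs: the last 16 and last 8 hex digits."""
    digits = normalise_fingerprint(fpr)
    return digits[-16:], digits[-8:]
```

For the key above this gives the long ID 299D7116EDC2ABE5 and the short ID EDC2ABE5. Short IDs are easy to collide deliberately, so compare the full fingerprint.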

9th June 2014

The two examples of syntax-highlighted code below were done using two different methods for implementing the indentation seen: the first uses repeated entities (as many as needed), and the second was done with div blocks that implement hanging indents of the whole s-expression block automatically (as well as some special inline div tags that don't automatically put a newline after).

23rd February 2014

See Humans TXT: We Are People, Not Machines for information.

1st January 2014

I've been experimenting with CSS for syntax highlighting.

TO DO:

The first code example uses lots of span tags but no special div tags:

The second example uses the auto-indent div method. Note that no character entities are needed in the source:

It's a little disappointing that closing parentheses cannot be put inside the div blocks if we want to stack all the parens at the end, but if stacking the closing parens is not desired, then putting the closing paren after the closing /div keeps the indentation levels of the closing parens where they ought to be (i.e., don't put closing parens inside the closing /div.)

The third example was auto-generated by a syntax-highlighting parser:

(Note that a tab is automatically inserted before in-line comments; I may have to fix that.)
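The parser behind the third example isn't shown. A minimal sketch of the general idea in Python: tokenise the s-expression with one regular expression and wrap each token in a `span` whose class the stylesheet colours. The class names (`paren`, `string`, `comment`, `number`, `symbol`) are hypothetical, not the ones this site's CSS uses, and unlike the parser above it inserts no tab before comments.

```python
import html
import re

# One alternative per token type; the named group says which one matched.
TOKEN = re.compile(r"""
    (?P<comment>;[^\n]*)
  | (?P<string>"(?:\\.|[^"\\])*")
  | (?P<paren>[()])
  | (?P<number>-?\d+(?:\.\d+)?(?=[\s()]|$))
  | (?P<symbol>[^\s()";]+)
  | (?P<space>\s+)
""", re.VERBOSE)

def highlight(source: str) -> str:
    """Return HTML with each token wrapped in <span class="...">."""
    out = []
    for m in TOKEN.finditer(source):
        kind, text = m.lastgroup, html.escape(m.group())
        out.append(text if kind == "space" else f'<span class="{kind}">{text}</span>')
    return "".join(out)
```

Escaping each token with `html.escape` matters because Lisp symbols such as `<=` would otherwise break the markup. Characters that match no rule (an unterminated string's opening quote, for example) are silently dropped by `finditer`, which a real highlighter would need to handle.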

This didn't start out as a real blog; it started as a place for me to experiment with techniques I might want to use somewhere else.

All code here validates as HTML5 and CSS level 3 according to the W3C Markup Validation Service and CSS Validation Services.

Email me here:

Joe.Loughry@CnAdocs.com

PGP key:

2C3B 11A1 CE7C 5B1F 87BC F5D0 299D 7116 EDC2 ABE5

Build 189